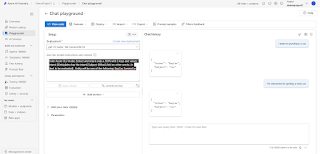

Microsoft Copilot is a generative AI assistant designed to understand users' requests in everyday spoken language.

This assistant can be custom-tailored to a specific business so that it quickly answers customers' questions.

This eliminates the need for customers to browse a website or use a search engine to find details about specific products (e.g. pricing, sizes, etc.).

Instead, customers can now ask questions like "What colors are available?" and receive direct answers.

This can give a business an edge over its competition.

Here's why investing in a custom Copilot could be one of the smartest moves for your business.

1. Enhanced Efficiency & Productivity

A custom Copilot is designed to integrate seamlessly into your website or app.

2. Business Specific Expertise

A custom Copilot can be trained with your business-specific knowledge, making it an expert in your particular business domain.

This allows customers to ask specific questions and get specific answers in plain, everyday English.

3. Reach More Customers

A custom Copilot can offer speech capabilities, so questions can be asked aloud and answers delivered as audio.

This helps you reach customers with visual or physical impairments.

4. Reach More International Customers

With language translation, your custom Copilot can communicate with international customers in the language of their choice, extending your reach to customers abroad. More Customers = More Sales.

5. Competitive Edge

By answering customers' questions quickly, a business can gain an advantage over its competition.

No longer do customers need to use a search engine or browse a website for answers on pricing & availability.

Instead, a quick question and answer with Copilot gets the job done, leading to a better customer experience and potentially increased sales.

6. Increased Data Security & Compliance

Custom Copilots can be designed with specific security protocols and compliance measures that align with your business needs.

By controlling how data is processed, stored, and shared, companies can minimize risks associated with third-party AI services while ensuring compliance with regulatory requirements.

In addition, Microsoft's data policy on AI is "your data remains your data only".

7. Cost Savings & ROI

While a custom Copilot requires an upfront investment in development, the long-term benefits can far outweigh the costs.

It quickly answers customers' questions without adding to the workload of your customer service representatives (CSRs).

Businesses can reduce labor costs, improve service efficiency, and capitalize on AI-driven insights to boost profitability over time.

In a Nutshell…

A custom Copilot isn't just an AI tool; it's a strategic advantage. By tailoring AI to your business needs, you can drive efficiency, enhance customer experiences, maintain security, and gain a competitive edge in your industry. In a world where technology is reshaping the way we work, a personalized AI solution could be the key to unlocking the next level of success for your business.

Ready to explore the possibilities? Let's build a future where AI works for you, not just with you.